Matrix to Matrix multiplication is a very popular algorithm to show the real performance of any Graphic card. This is by nature due to the fact that this operation is very costly in terms of computing performance as the amount of multiplications required scales with matrix size – N3 . Even a very bad and naive CUDA kernel would perform very well in comparison to any CPU implementation.

The goal of this post is to however show that GPUs have not only the main “global” memory, but other types of memory as well. Namely the shared memory. The main difference between these two is that shared memory is much much smaller and is located directly on the chip (Very similar to a Block RAM in FPGA). As a result, this memory is several times faster than global memory. This is important as some algorithm’s performance are simply bound by the overall memory throughput – so that if somehow, one is able to reduce the global memory load, the algorithm will run faster.

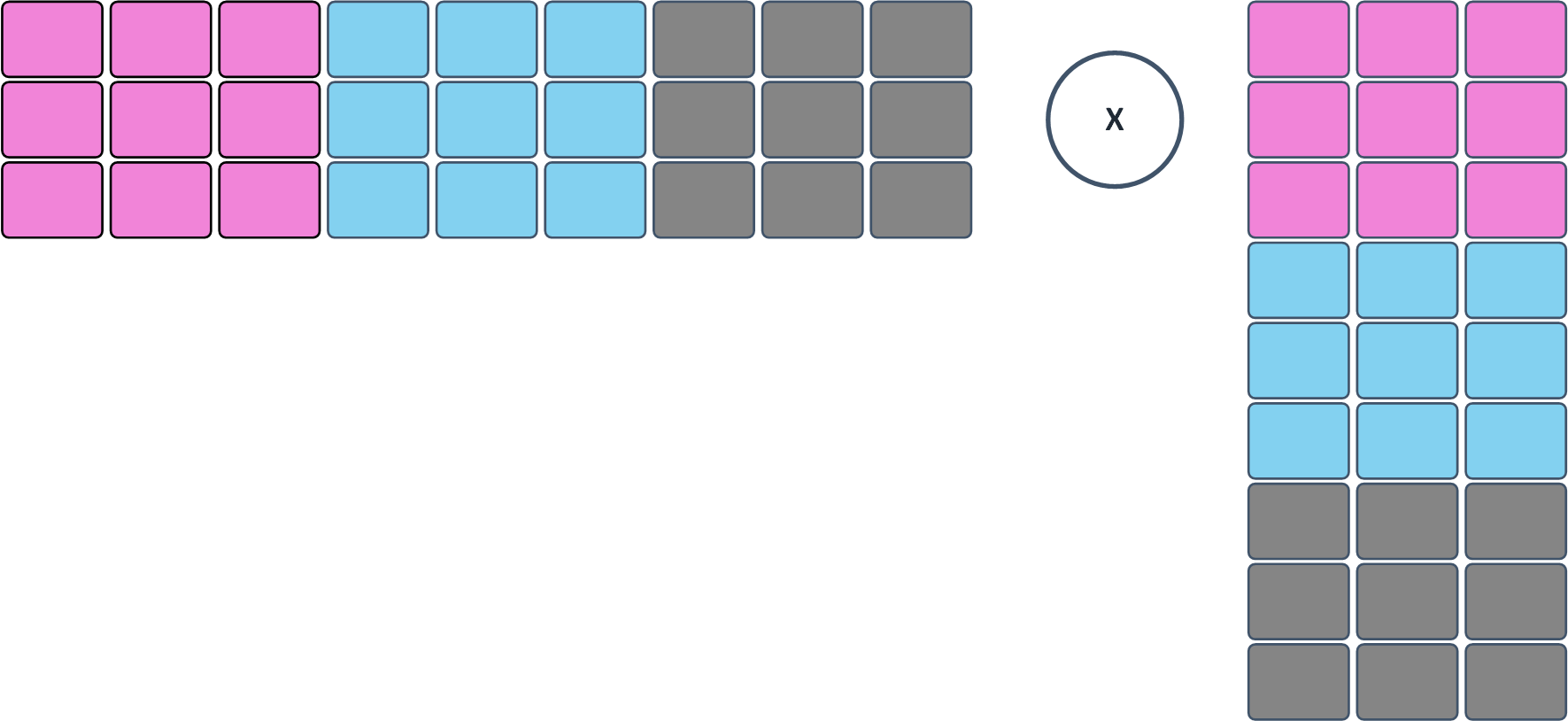

The matrix multiplication is a classical example of “Inefficient memory usage“. For 1 addition and one multiplication, we are doing 2 memory accesses and thus the compute-to-memory ratio is very small. As a result, the kernel will not have optimum performance. On the other hand matrices can be quite huge and cannot fit into the shared memory ~64KB. There are however techniques,which allow us to do some tricks and compute the MM multiplications within the shared memory. Lets first See the naive Kernel example:

/***************************************************

*@brief: Naive Matrix Matrix Multiplication in CUDA

**************************************************/

__global__ void MM_Mul_Default(int* a, int* b, int* c, int N)

{

int tix = threadIdx.x + blockDim.x * blockIdx.x;

int tiy = threadIdx.y + blockDim.y * blockIdx.y;

if ((tix < N) && (tiy < N)) {

int tid = tix + tiy * N;

int sum = 0;

for (int i = 0; i < N; i++) {

int temp1 = a[tiy * N + i]; // Row

int temp2 = b[N * i + tix]; // Col

sum += temp1 * temp2;

}

c[tid] = sum;

}

}This kernel is pretty easy to understand and probably even self-explanatory. What we can however do is to tile the operation into several pieces / phases as show below:

IE, we will compute the dot-products for each elements within chunks. How big? That depends. They must fit into the shared memory. In fact finding the right size and thread size per block is not easy and requires testing and tuning. Still, even after tuning, it will be likely tuned to a single architecture, so there is no point to tune-to-maximum unless that is clearly our goal. A very good and recommended start is to use 16×16 thread block (Total of 256 threads per block). Unlike in the previous kernel, the optimized kernel with shared memory has 2 loops: First one that loops across the tiles IE (Dimension / TILE) times and second one that loops across the shared memory. Note the __syncthreads() ,which are extremely important and maintain synchronicity across the thread block.

/******************************************************************************

*@brief Optimized Matrix Matrix Multiplication by utilization of Shared Memory

*****************************************************************************/

__global__ void MM_MUl_Optimized(int* a, int* b, int* c, int N,int Phases) {

// -----------------------------

// ------- Grid Indexing -------

unsigned int tx = threadIdx.x;

unsigned int ty = threadIdx.y;

unsigned int tix = tx + blockDim.x * blockIdx.x;

unsigned int tiy = ty + blockDim.y * blockIdx.y;

// ------------------------------------------------

// ------ Statically Declared Shared Memory -------

__shared__ float matrix_a[TILE][TILE];

__shared__ float matrix_b[TILE][TILE];

// ---------------------------------------

// ------- Check Processing Offset -------

if ((tix < N) & (tiy < N)) {

unsigned int Lindex = tiy * N + tix;

int sum = 0;

// --------------------------

// ------- Phase Loop -------

for (int i = 0; i < Phases; i++) {

// --------------------------------------------------

// ------- Load Phase Data into Shared Memory -------

matrix_a[ty][tx] = a[tiy * N + i * TILE + tx];

matrix_b[ty][tx] = b[(i * TILE + ty) * N + tix];

__syncthreads();

// -----------------------------------------

// ------- Loop Across Shared Memory -------

for (unsigned int j = 0; j < TILE; j++) {

int Temp1 = matrix_a[ty][j]; // Row

int Temp2 = matrix_b[j][tx]; // Col

sum += Temp1 * Temp2;

}

__syncthreads();

}

// ----------------------------------------

// ------- Save Data Back to Global -------

c[Lindex] = sum;

}

}Experts from nVidia will further tell you, that even this is not a well-written kernel and that you can still optimize it. That being said, its very hard to beat performance of the nVidia libraries (BLAS, FFT etc.) The goal was to show the way. If I will ever need to use MM multiplication,I will use BLAS, but things happen frequently, that you will want to implement custom functionality and its good to know how to improve it. On my GTX1060, I have measured the difference of those times as follows: 9.98ms / default and 4.22 ms / optimized. Thus giving 2x additional boost,which is quite handy ?. Note that I have used integers specifically as wanted to cross-check results against CPU implementation, which was utterly slow and I did not even measured it – cross checking floating point would be more complicated.

If you are more curious about this example and the underlying host-code along with the measurements of the time (CUDA Events), feel free to download ➡️ HERE ⬅️ and take a look yourself. Note that I was as usually lazy and expected both AxB matrices of the same sizes and further constrained to dimension size divisible by 16. As always I do highly recommend to have a “CUDA by example” book nearby for additional tricks and tips ☄️