Cuda FFT R2/R4/R8

When I was starting to learn with CUDA, our teacher told us to chooose an algorithm and implement it in CUDA for a semestral project. For me as beginner, everything seemed complicated as I had no idea how to code anything in CUDA. I would have probably even stuggled with parallel reduction algorithm, but I wanted to know how FFT works and so I asked him whether I could actually work on FFT. I received a negative answer back then that FFT might seem too easy as it has been coded countless times – I can somewhat agree with the last statement.

Nevertheless, I have chosen to implement Gaussian Blur of an Image instead as my semestral work – Which by the way was coded extremely badly and the operation was carried as a 2D convolution by definition. If you are not familiar with image/signal processing, I can assure you, that are ways of how to achieve the same more efficiently and easily. The only pros it had for me was, that it was a very good CUDA exercise ?.

When I have later on decided to code the FFT anyway for my bachelor’s work, it was a nightmare – basically all complex operations (Additions, substractions, divisions and multiplications) were implemented in polar form – basically the worst way it can be implemented due to excessive usage of sin() / cos() functions – which at that time was even worse due to the fact that they were computed by SFUs (Specifial Funtion Units) – which were quite rare on the GPU device.

To be honest, its not easy to get into FFT if you dont have any knowledge at all. Should you implement the mixed radix? split radix? Base radix? Which radix? R2/R4/R8? Should you choose DIT (Decimation in Time) or DIF (Decimation in Frequency)? Do you need to do digit/bit – reversal prior or after the FFT? What are twiddle factors? Is it necessary to compute them within each butterfly? What are the formulas for the butterflies? … Sometimes I think that people just forgot what is the purpose of the university.

In my understanding is it mostly to let the students get into touch with whatever they want as long as they got some devotion to do it and support them as long as the direction approximately matches the university program.

But Lets get back to the post: We will be doing R2/R4/R8 (All of these) FFTs in DIF form and as such, the digitreversal will be done at the end of the FFT. There are more ways of how to achieve the digitreversal, but the simpliest and most easily understandable form is to decompose the indexes into base form. For R4 (Below), the index for 7 is for example simply 1x41 + 3x40= [1 3]. Once we got the digits, we invert their order ([1,3] → [3,1]) and after that is done, we convert them back to decimal. ( 3x41 + 1x40 = 12 + 1 = 13):

Index 0 || Digits: [0 0] || Reversed: [0 0] || RevIndex: 0

Index 1 || Digits: [0 1] || Reversed: [1 0] || RevIndex: 4

Index 2 || Digits: [0 2] || Reversed: [2 0] || RevIndex: 8

Index 3 || Digits: [0 3] || Reversed: [3 0] || RevIndex: 12

Index 4 || Digits: [1 0] || Reversed: [0 1] || RevIndex: 1

Index 5 || Digits: [1 1] || Reversed: [1 1] || RevIndex: 5

Index 6 || Digits: [1 2] || Reversed: [2 1] || RevIndex: 9

Index 7 || Digits: [1 3] || Reversed: [3 1] || RevIndex: 13

Index 8 || Digits: [2 0] || Reversed: [0 2] || RevIndex: 2

Index 9 || Digits: [2 1] || Reversed: [1 2] || RevIndex: 6

Index 10 || Digits: [2 2] || Reversed: [2 2] || RevIndex: 10

Index 11 || Digits: [2 3] || Reversed: [3 2] || RevIndex: 14

Index 12 || Digits: [3 0] || Reversed: [0 3] || RevIndex: 3

Index 13 || Digits: [3 1] || Reversed: [1 3] || RevIndex: 7

Index 14 || Digits: [3 2] || Reversed: [2 3] || RevIndex: 11

Index 15 || Digits: [3 3] || Reversed: [3 3] || RevIndex: 15

As you can see, the digitreversal is quite simple. My original code used new/free C++ operators in CUDA kernel code, which is allowed, but in fact not necessary as each thread can be assigned a piece of the shared memory. By the way, do you know that you can use printf() from CUDA kernel code? I am not kidding, seriously ?… The only problem so far I found out with shared memory is that you have to pay extra attention to both – Its size and the kernel grid dimensions (or just thread block dimension). If you are starting to learn CUDA, I would usually recommend to skip integrating shared memory until you understand how to code in CUDA well enough. Using shared memory is in fact optimization of your algorithm and you cannot learn and optimize at the same time – or at least I highly do not recommend you to do that ?

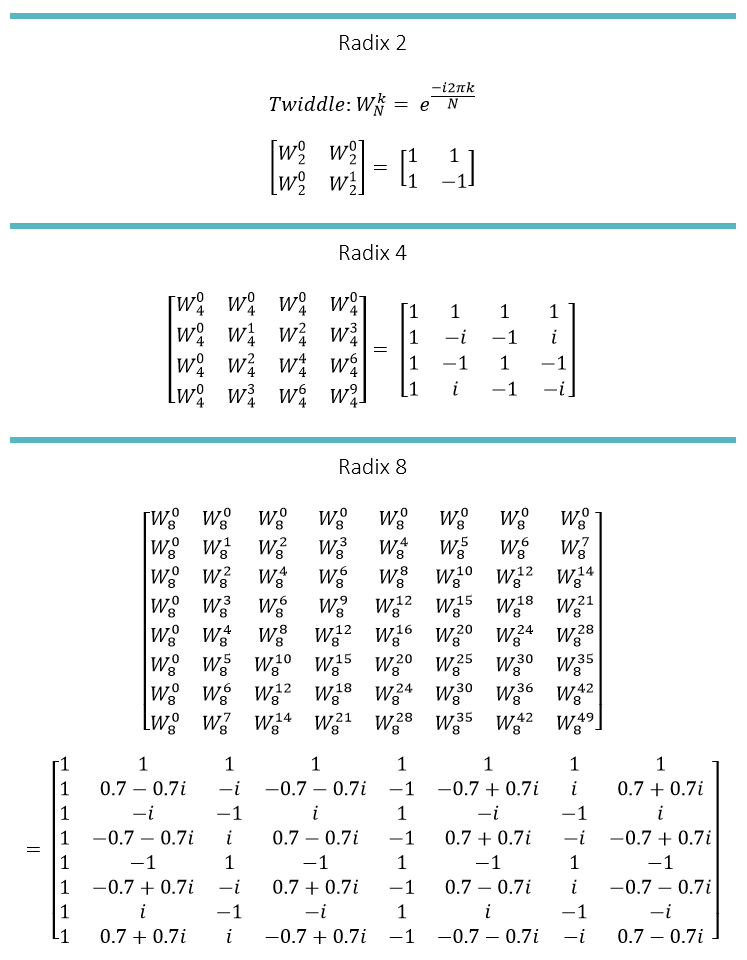

Below, you can find the semi-generic Matrix formula for FFT Butterflies as well as for the twiddles. Basically all of modern FFT implementations (Except for few of mine own) use Twiddle – precomputation and LUT (Look-Up-Table) – Its much more efficient that computing them during runtime. Experienced CUDA programmers are free to use texture/constant memory instead of global for this purpose.

One thing I didn’t mention so far is that although it might seem like a good idea to let multiple CUDA threads work on a single butterly (Especially for large butterflies), its actually quite a bad idea – don’t do that and completely forget about it. It greatly simplifies things to let each thread compute just a single FFT butterfly. A small reminder then that it takes 512 threads to compute R2 transform with a length of 1024 (Seems obvious, but ? … ). The higher radix one uses, the less computations are required (Its got its drawbacks – that why most implemntations stay with R2/R4/R8) a comparison of those radixes and their relative performance can be found for example HERE.

The entire FFT then basically boils down to “Just a few operations“:

- Butterflies (R2,R4,R8 … )

- Bit/Digit Reversal

- Twiddle Calculation

- Find proper Indexing to assign butterflies corresponding data along with correct twiddle factors.

- Example of Indexing for both Data and Twiddles can be found HERE for 64-Point R4 DIF FFT.

FFT Twiddle/Data Indexing is in fact the most tricky part of the algorithm. I don’t want to go into details here, but indexing basically requires integer divisions, bit-shifting (IE taking custom power of 2) and various modulo operations as well as tracking the currently processed stage of the FFT. This is much easier to look up in the source code, so please go ahead and download➡️ HERE ⬅️.

I have added as always a Matlab-Mex file to verify the results (I am quite used to do the verification myself). Note that each code can be simplified and optimized. I do always try to do “Reasonable best”. The project is for VS2019, CUDA 10.2 and by default uses CC 6.1 (GTX 1060). I do highly recommend to do FFTs via nVidia CUDA’s acceleration library CuFFT anyway.